New Deepfake Technique Can Make Portraits Sing

The term “deepfake” was coined in 2017. It refers to a technique that uses machine learning to superimpose combined videos and images onto source images. Now, a new report from Imperial College London and Samsung’s AI research lab in the UK shows how a simple image and an audio file can be used to make a video of a portrait singing.

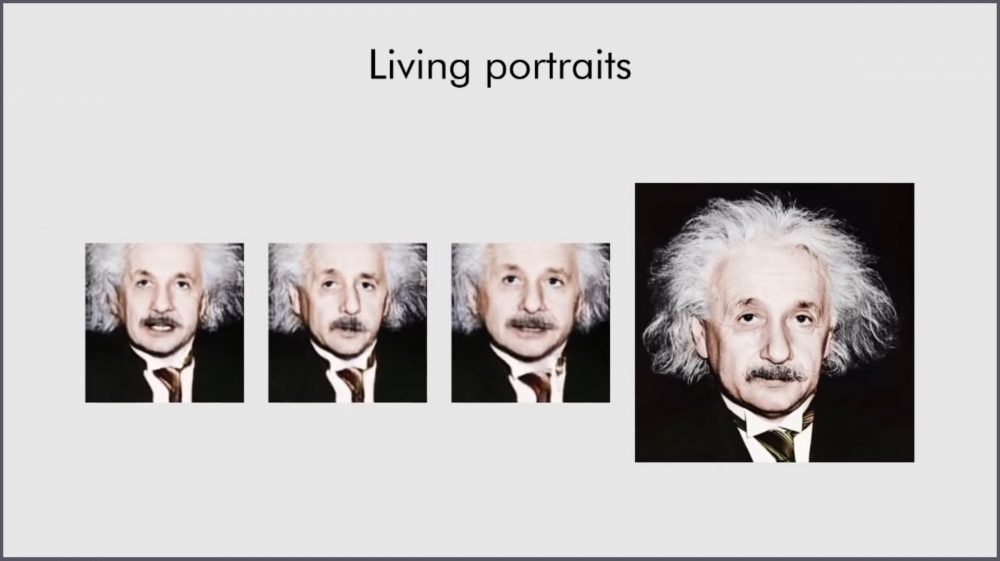

Previously, the technique was used to produce lifelike videos from still shots. The researchers rely heavily on machine learning to generate realistic-looking results. A trained eye can easily spot the mechanical, almost eerie imitation by the AI, but the result is still impressive given how little data is needed. The technique is even shown animating a portrait of Albert Einstein, the famous physicist, to create a unique lecture.

On the more entertaining side, Rasputin can be seen singing Beyoncé’s iconic “Halo” with comical results. More realistic examples are also available: videos generated to mimic human emotions based on the audio fed into the system. Producing deepfakes has become remarkably simpler over time, even if the tools are not commercially available.

However, people are understandably worried about the implications of the technique. Those who stand to gain could use it to spread false information and propaganda on a large scale, using famous personalities as templates. US legislators have already started to take note of the complications that may lie ahead. Deepfakes have already caused harm, especially to women who have been targeted with fake pornography meant to embarrass them. It is still too early to say how much good or harm the technology might end up causing.